The purpose of this article is to give a reasonable audio quality comparison of uncompressed PCM audio, DAB, DAB+, FM and AM. The broadcast formats were simulated in the manner described in the following methodology – whilst not quite as good as using full transmission modulation and demodulation chains, it is hoped that these simulations are “good enough” for comparison purposes.

Photo by bods

Audio Comparison Methodology

First of all, a sample of music was ripped from CD using dBpoweramp software and saved as a 16-bit PCM WAV file, i.e. uncompressed audio. Copies of this file were then processed as follows to simulate the various transmission formats:

- DAB – file converted to MP2 in three formats using dBpoweramp software. Formats selected were 80kbps Stereo, 80kbps Mono and 128kbps Stereo. These files were then converted back to PCM WAV files for compatibility reasons

- DAB+ – file converted to AAC HE v2 (which we call AAC+). Formats selected were 56kbps Stereo, 32kbps Stereo, 16kbps Stereo and 12kbps Stereo. These files were then converted back to PCM WAV files for compatibility reasons

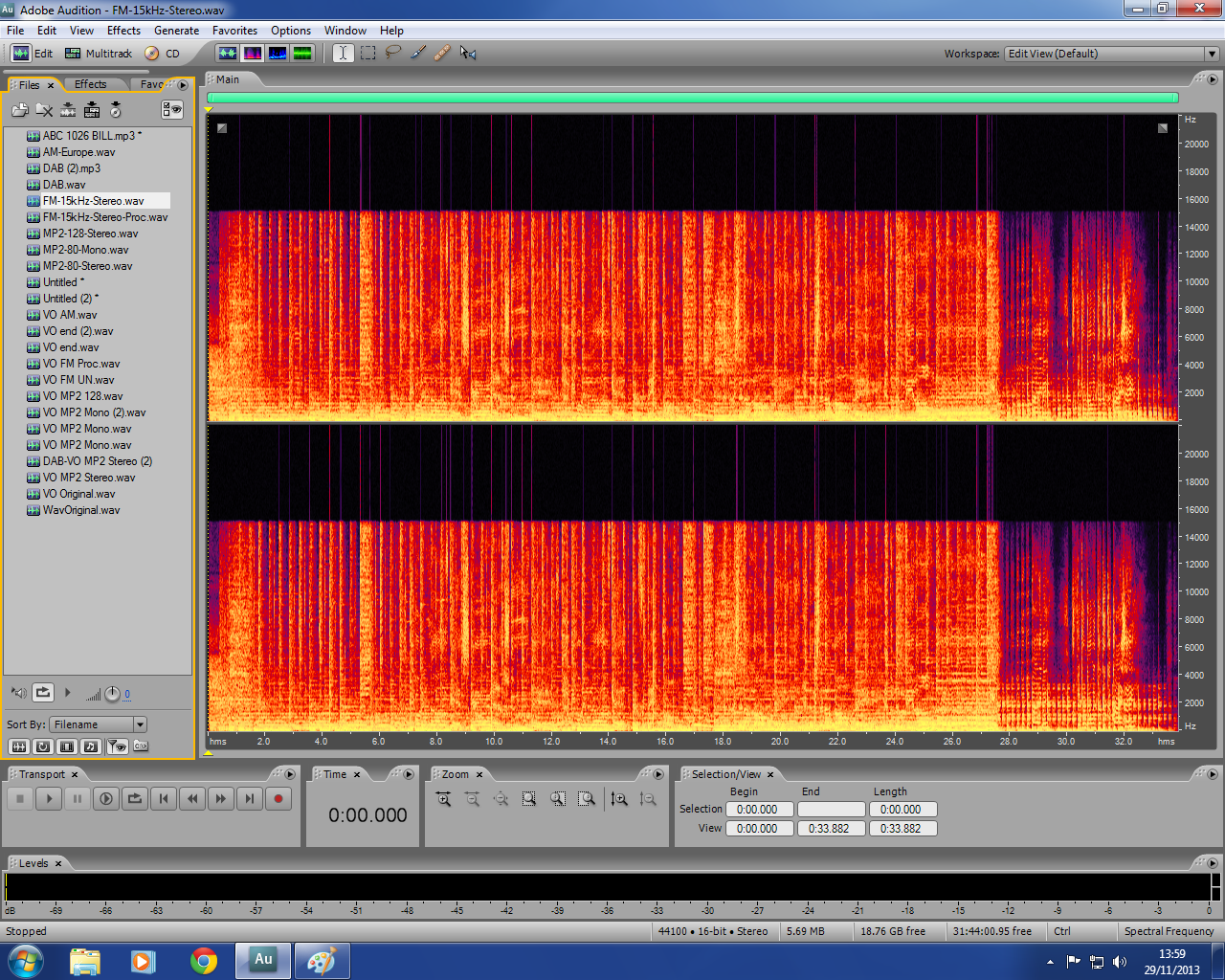

- FM – File was band-limited to 15kHz. Another version also had audio processing applied using the “Broadcast” preset in the multi-band audio processor function of Adobe Audition 3.0

- AM – File was processed using AM simulator preset on Reaper software

Associated Broadcast Consultants’ opinion is that 80kbps Stereo is inadequate quality, but becomes bearable at 80kbps Mono, albeit with total loss of stereo image. 128kbps MP2 seems to give a good approximation of FM quality both audibly and on the spectral charts. The AAC+ codec (used in DAB+) is remarkably good at lower bit rates – still acceptable at 32kbps, but becoming a bit “YouTubey” at 16kbps and horrible at 12kbps. However, we feel it still has “something missing” at 56kbps, which is the highest bitrate possible for that codec (above that it becomes normal AAC). Unsurprisingly AM sounds the worst, but this is probably exaggerated by the lack of proper AM audio processing, which would make a professional AM broadcast sound much better.

Audio Quality Comparison files

The audio samples have been combined into one 6 minute comparison file. Ideally you should listen to the WAV file (123MB), but if you are in a rush and/or have poor broadband, the 320kbps MP3 file still clearly shows the difference in audio quality:-

(Just click to play or Right click and file save or save target as to download the files)

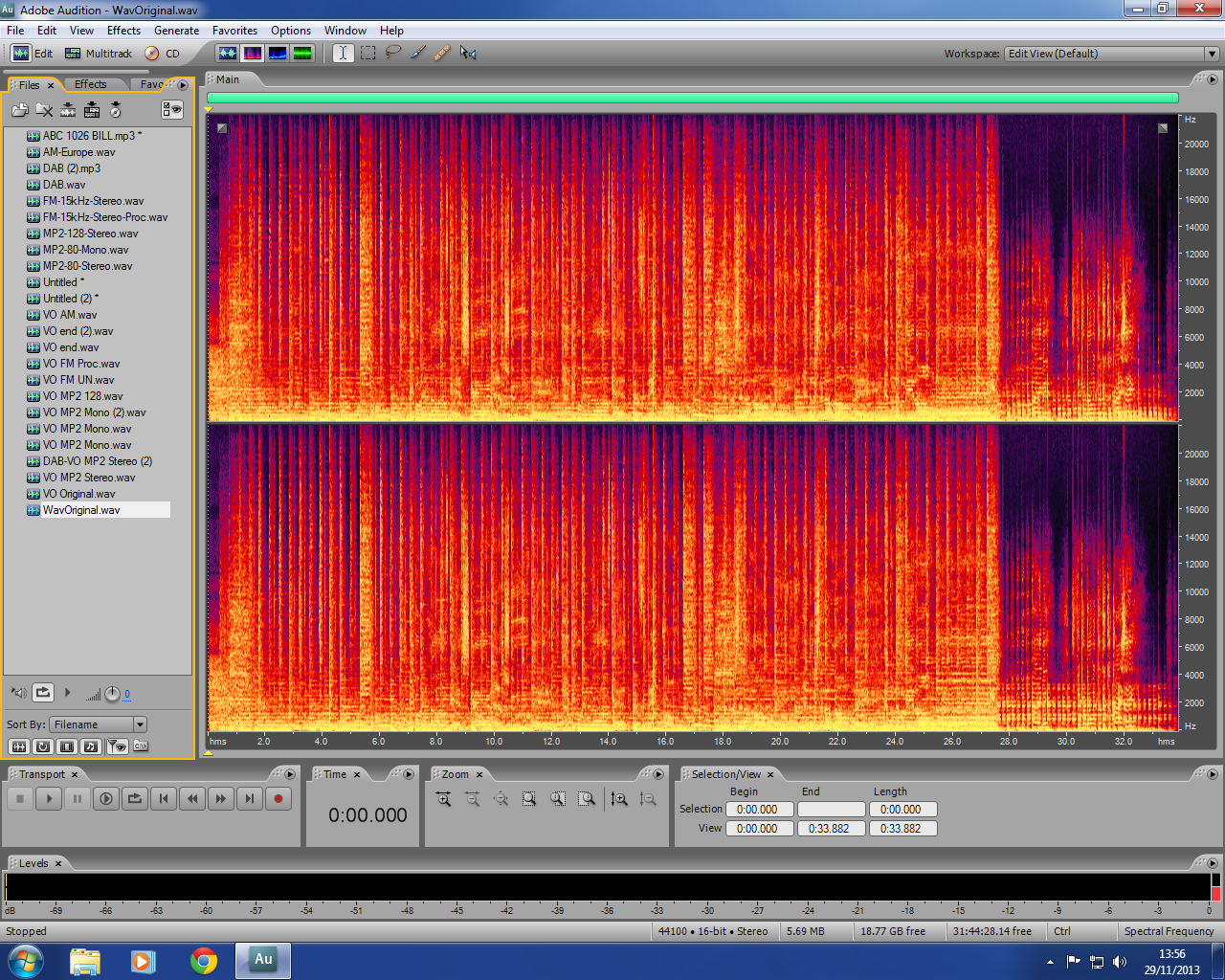

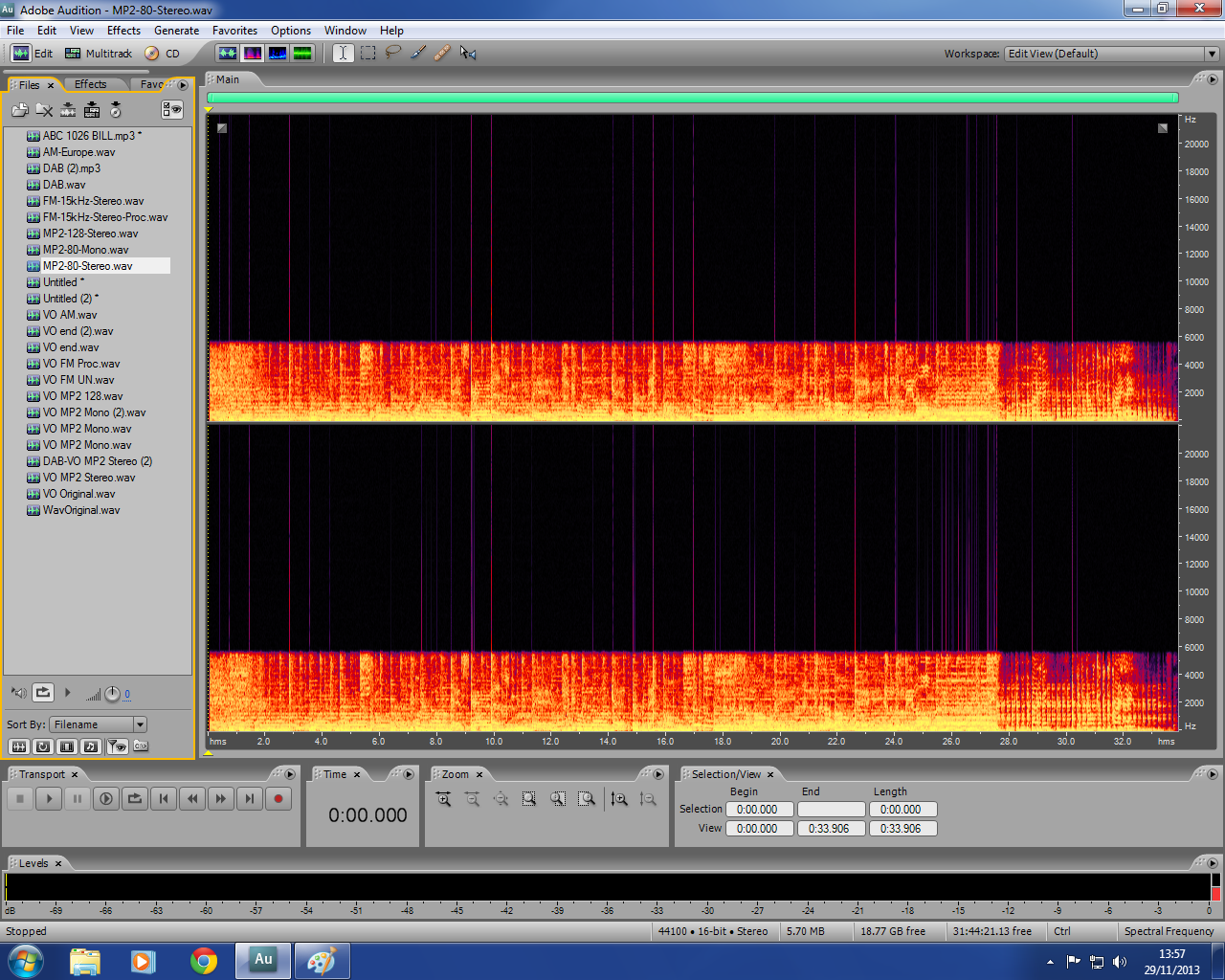

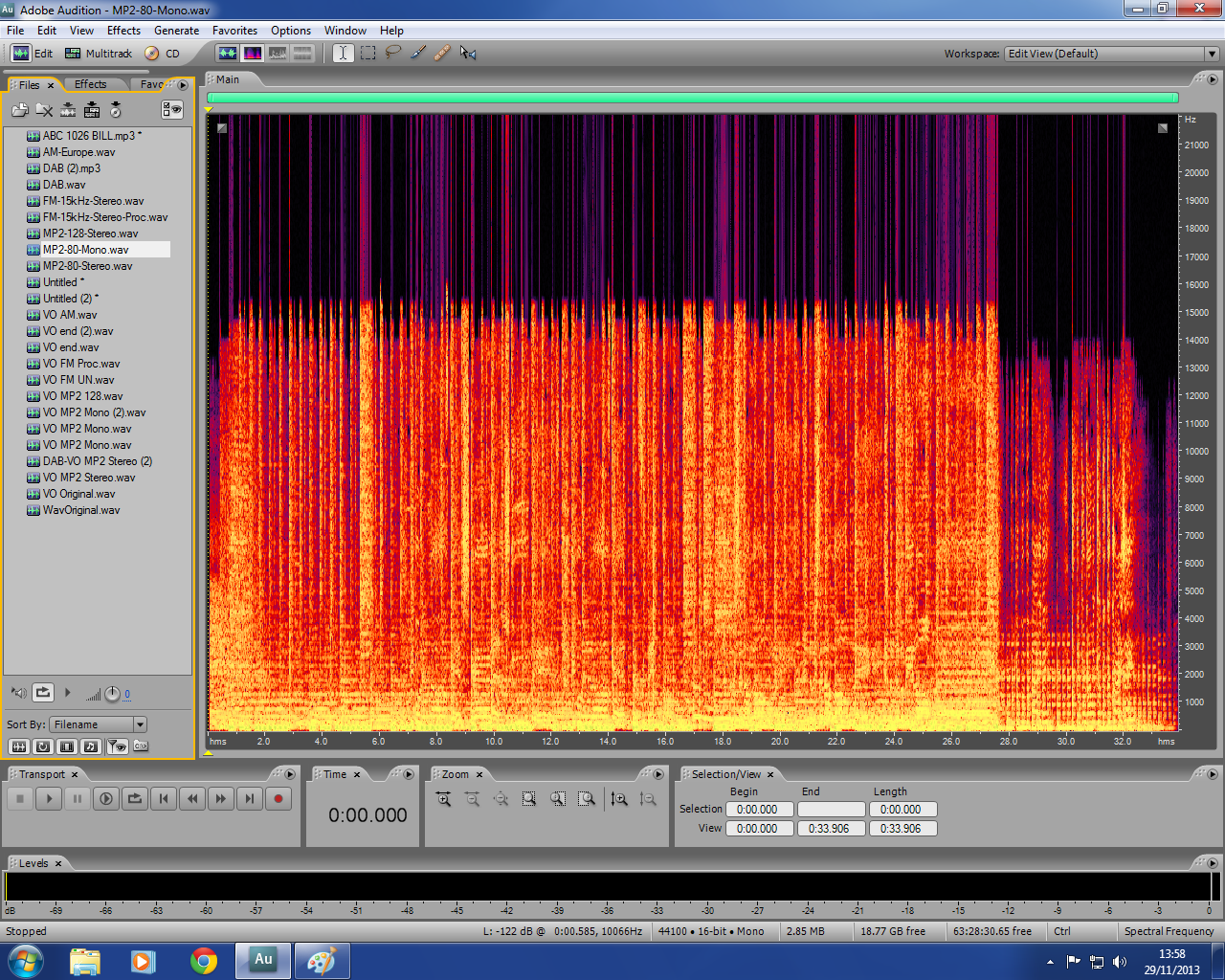

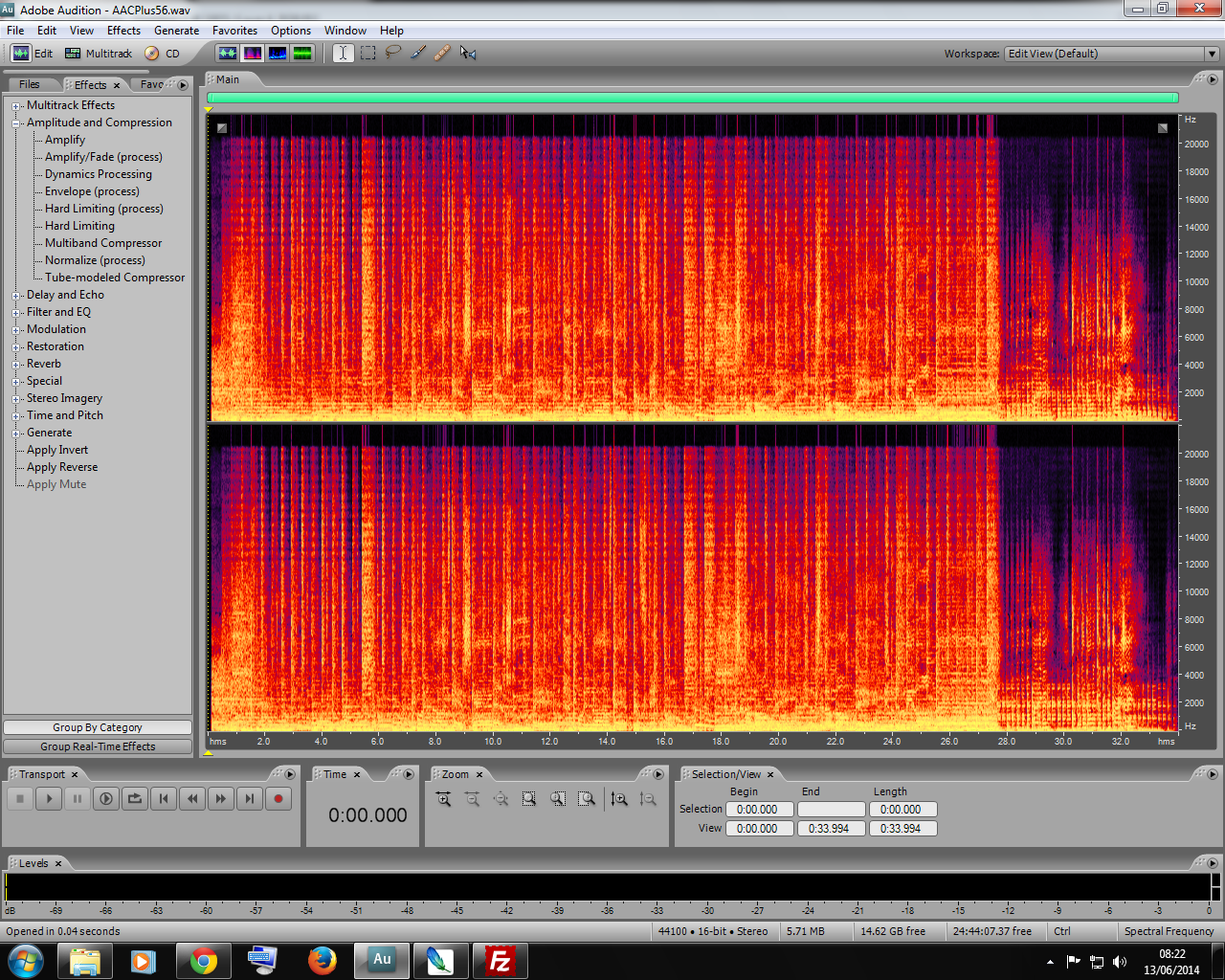

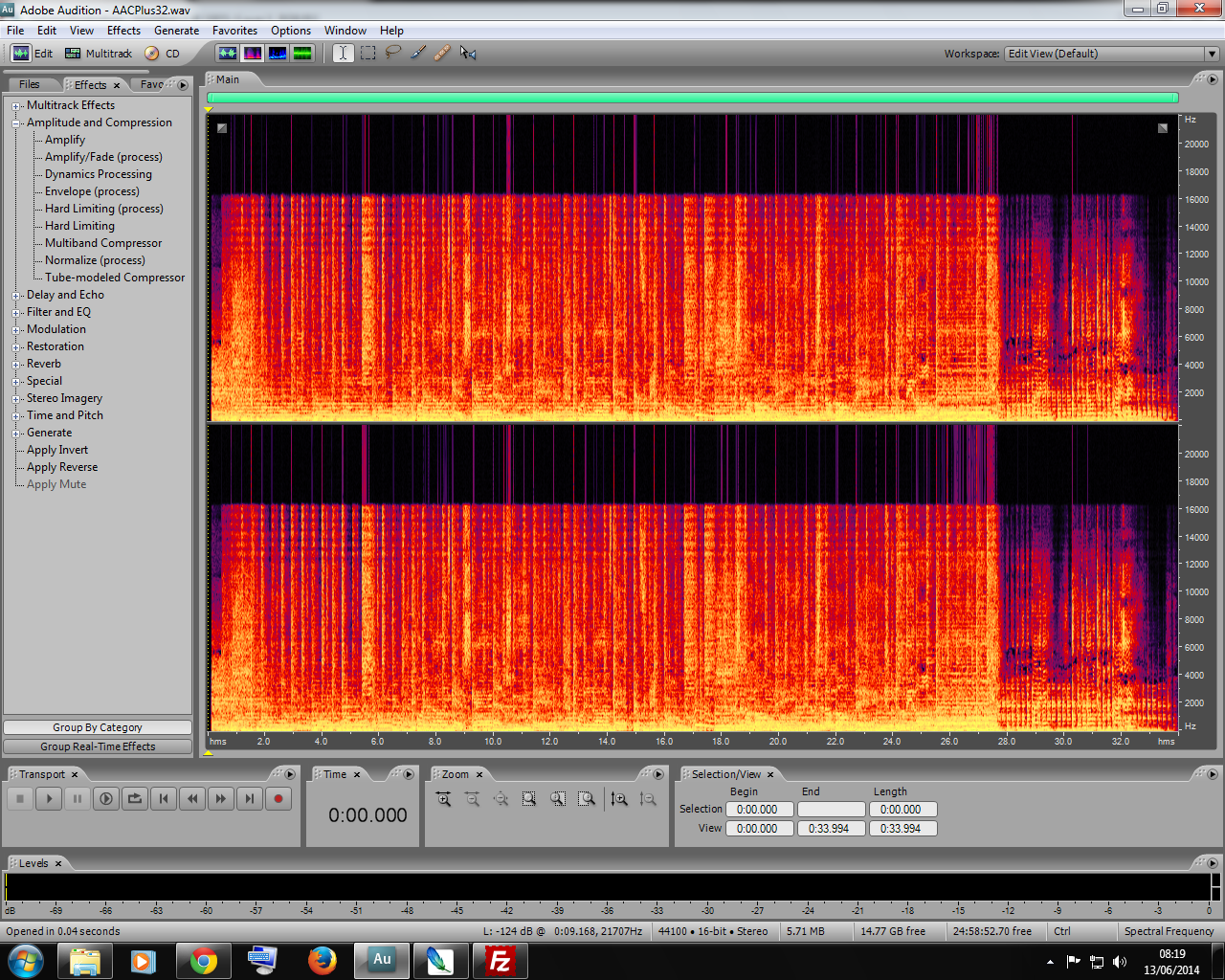

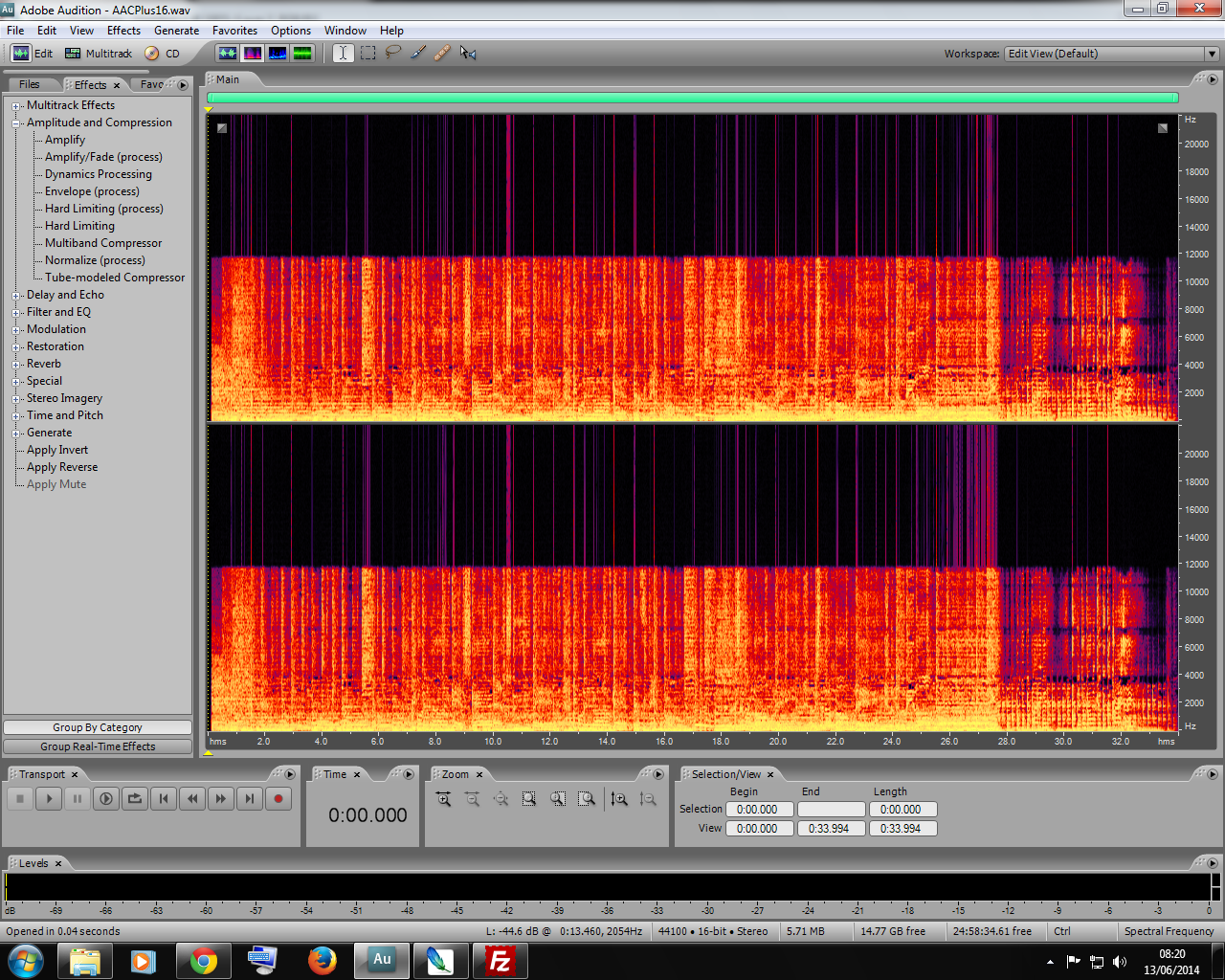

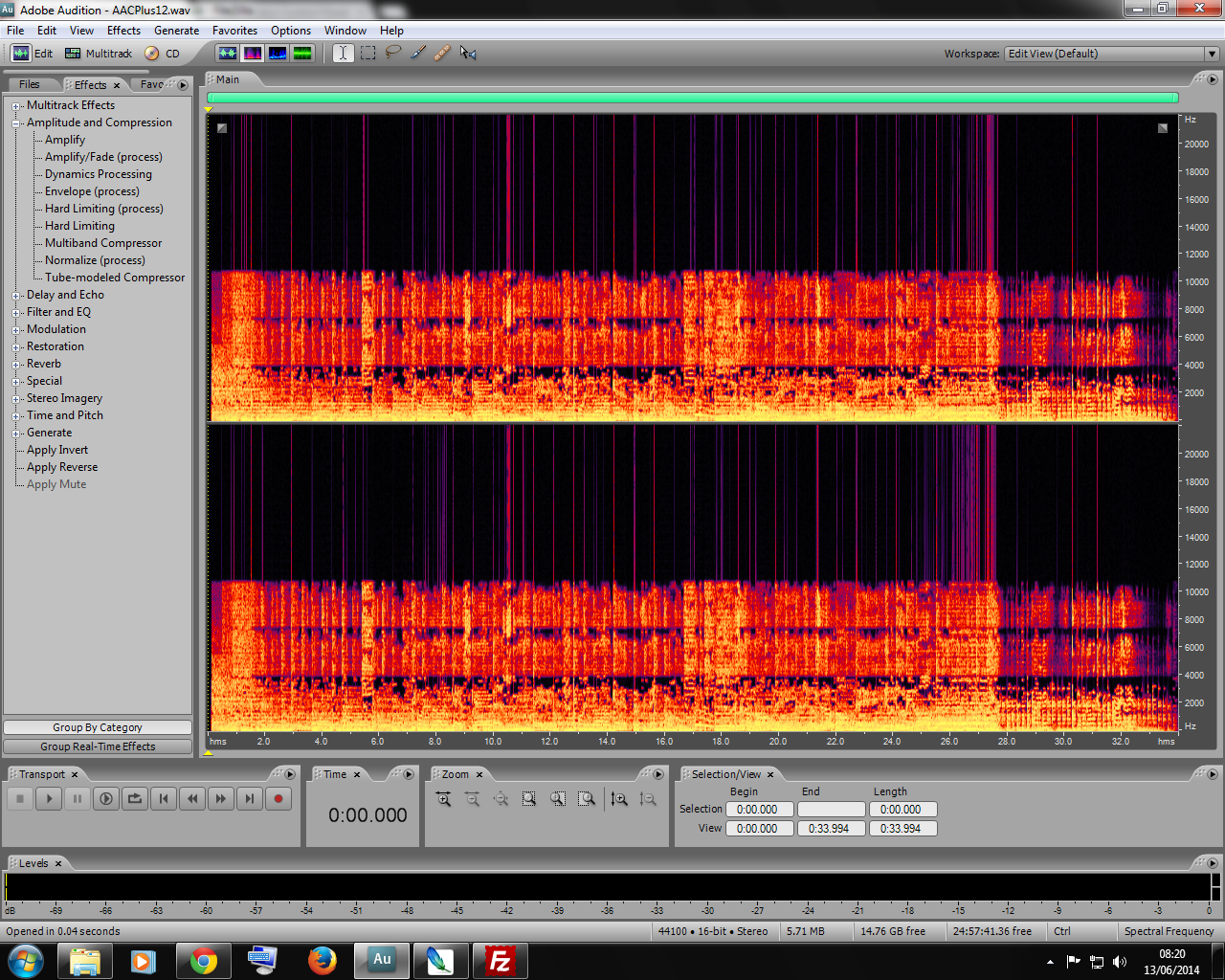

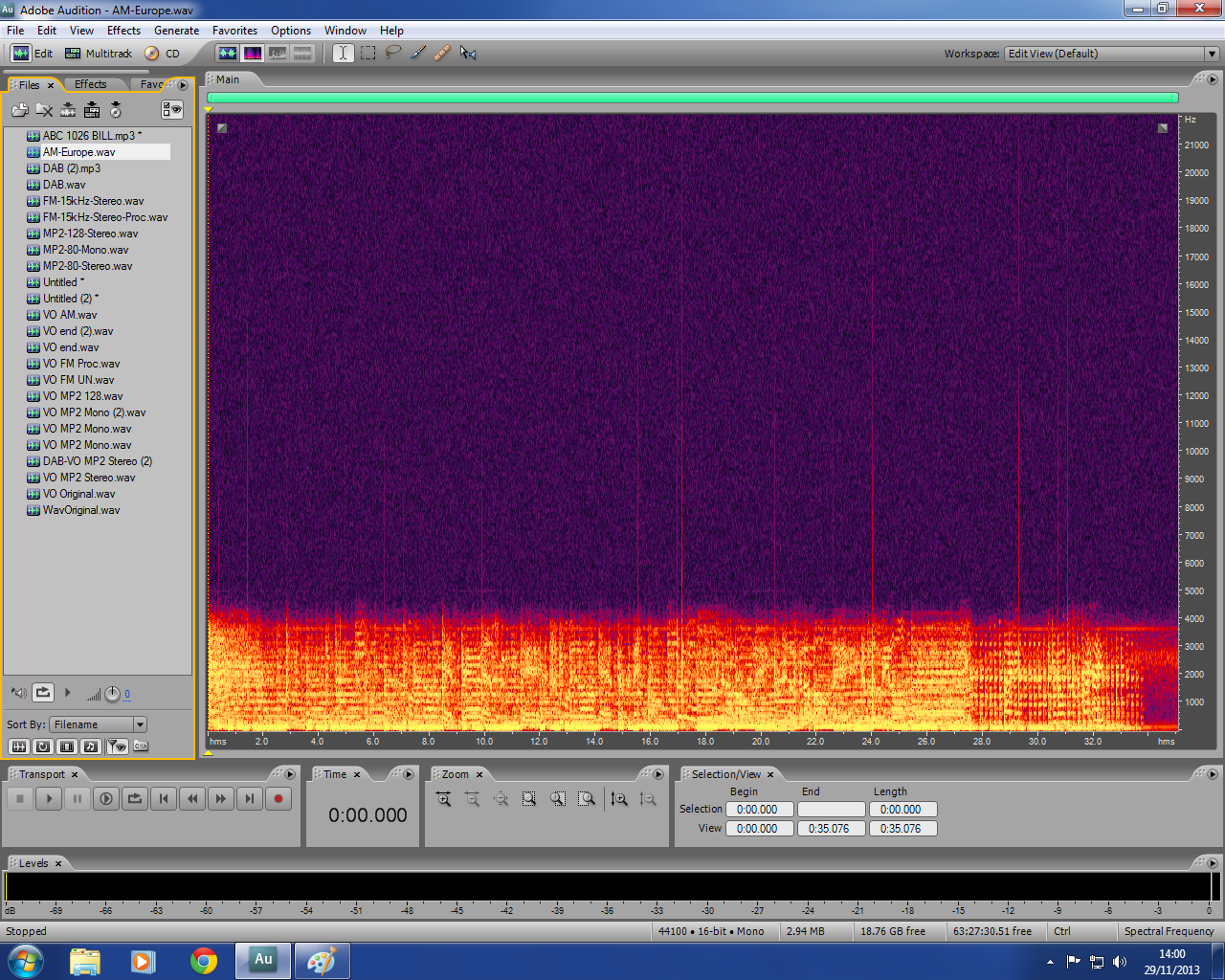

Spectral Charts

To supplement listening tests, we also took screen captures of the audio spectrum of each file. These clearly show the band limiting of the FM file. They also show surprisingly heavy band-limiting in the MP2 80kbps Stereo file (almost to AM quality). In contrast, the 80kbps mono MP2 file has a much better audio spectrum, and this is clearly audible on the test file. The 12kbps AAC+ picture is interesting. It appears to show aliasing – that is, audio components between 0-4kHz are repeated from 4-8kHz and 8-12kHz. This could be why it sounds so bad! It could be avoided by band-limiting the audio to 4kHz before encoding. We are not sure if this is a problem with the codec used, or a limitation of the AAC HE v2 codec specification.

- Original Audio PCM WAV

- MP2 80kbps Stereo

- MP2 80kbps Mono

- FM Unprocessed

- FM Processed

- DAB+ (AAC+ or AAC HE V2) – 56kbps

- DAB+ (AAC+ or AAC HE V2) – 32kbps

- DAB+ (AAC+ or AAC HE V2) – 16kbps

- DAB+ (AAC+ or AAC HE V2) – 12kbps

- AM Europe (4.5kHz)

Would make sense to use FLAC instead of wav.

We agree technically – but practically we believe WAV is more universally supported than FLAC – and that aspect is important on any website

Unfortunately I cannot download the files due to a low-bandwidth connection. But I want to add some points.

The thin vertical lines in your spectra seem to be the result of clipping of the audio. If you use an original wave file that reaches 0 dBFS (digital full scale) most of the time (as almost every pop and rock production does today) and apply any kind of psychoacoustic coding, you will get artefacts. These artefacts can increase or decrease every single sample on playback. On average this will not change the audio level, but every single sample that is close to 0 dBFS can clip due to these artefacts. The lower the bitrate, the higher the “overshoot”. Typical values for MP2 can be some dB when coded to 128 or 192 kbps. Above 256 kbps the overshoots become less important.

So if you do coding experiments please do not use wave files going to 0 dBFS. Attenuate the file prior to coding to -6 dBFS in peak level, then you will be safe.
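As a rough illustration of that advice, here is a minimal Python sketch that scales a block of samples so its peak sits at -6 dBFS before it is handed to an encoder. It assumes floating-point samples where 1.0 is digital full scale; the function names and the test tone are made up for the example and do not come from any particular audio tool.

```python
import math

def peak_dbfs(samples):
    """Peak level in dBFS for float samples where full scale is 1.0."""
    peak = max(abs(s) for s in samples)
    return float("-inf") if peak == 0 else 20 * math.log10(peak)

def attenuate_to_peak(samples, target_dbfs=-6.0):
    """Scale samples so the largest absolute value sits at target_dbfs."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return list(samples)  # silence: nothing to scale
    gain = 10 ** (target_dbfs / 20) / peak
    return [s * gain for s in samples]

# Hypothetical test signal: a 1 kHz tone at 48 kHz that reaches full scale.
tone = [math.sin(2 * math.pi * 1000 * n / 48000) for n in range(480)]
safe = attenuate_to_peak(tone)
print(round(peak_dbfs(safe), 2))
```

A peak of -6 dBFS corresponds to roughly half of full scale in linear terms, which leaves headroom for the codec overshoots described above.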

According to years of listening experience, MP2 (“the classic” DAB coding, also used on DVB-C, DVB-S and DVB-T) can deliver the following results with a good codec:

96 kbps MP2 mono – very good for portable use, good for HiFi use

128 kbps MP2 joint stereo – audible artefacts in portable use, bad for HiFi use

160 kbps MP2 joint stereo – audible artefacts in portable use, but sometimes acceptable, bad for HiFi use

192 kbps MP2 joint stereo or linear stereo – no audible artefacts in portable use, good for HiFi use

256 kbps MP2 linear stereo – excellent in portable use, very good for HiFi use

320 or 384 kbps MP2 linear stereo – excellent in portable use, very good – excellent for HiFi use

However, quality at a given bitrate depends on codec settings and codec quality. There was a codec called RE660 manufactured by Barco / Scientific Atlanta in the 90s that was widely used for satellite transmission. The RE660 had a bad implementation of joint stereo mode resulting in a bad audio quality at 192 kbps (!). In linear stereo this codec was absolutely ok.

Another important point is sound processing in radio stations. These terribly distorted and “discolored” sounds will result in a lower quality of the MP2 or AAC sound.

Germany’s public broadcasters use 320 kbps on satellite, you can hear the high quality in the cultural programmes that are transmitted without heavy sound processing. 384 kbps is used inside the broadcast houses to store audio files ready for transmission.

The new DAB+ codec AAC is more sophisticated. The quality depends on some settings.

A typical setting for stereo is 96 kbps HE-AAC (with spectral band replication, SBR). It delivers bright treble – but this is artificial above approx. 11 kHz. It sounds very clear at first, but when you listen longer you will hear that this treble is annoying. It feels like it does not belong to the original audio (and this is the truth). So old recordings from the 60s will have an extremely brilliant treble (which of course is not the truth) and speech or the voice of a (female) singer seems not to belong to the original recording.

You can use LC-AAC instead at 96 kbps; the LC codec codes the complete frequency range rather than cutting at 11 kHz and adding artificial treble. The result is slightly better – but only on receivers that are capable of good-quality handling of the LC codec. Some receivers produce a terrible sound at 96 kbps LC-AAC that sounds like 32 kbps or so. Due to this some broadcasters in Germany decided to go back to the HE codec with artificial treble.

LC-AAC is delivering high quality on all receivers at bitrates of 128 kbps or higher. This is sometimes used for cultural programmes.

The typical “low cost” setting 72 or 88 kbps HE-AAC sounds terrible and any good FM reception will sound better. This is particularly the case when the “net” bitrate is significantly lower than the “gross” bitrate because of additional transmitted pictures etc.

My private preference:

MP2 above 256 or 320 kbps > well-coded MP2 at 192 kbps = good FM reception > MP2 at 160 kbps joint stereo = LC-AAC at 144 or 128 kbps > LC-AAC at 128 kbps > HE-AAC at 96 kbps. Anything at a lower bitrate is not acceptable.

There is not enough spectrum to make Layer 2 sound good and AAC+ sounds (to me at least) hollow and unsatisfying at the kind of bit rates (32-64) the AAC+ broadcasters are using. It’s a race to the bottom.

I would be happy if DAB+ were to use standard AAC-LC at 128k for stereo and 64k for mono.

“Not enough spectrum” is a nonsense. There’s not enough spectrum *for the number of stations they want to cram in*, is the thing. We’ve seen exactly the same with Freeview TV, though thankfully with the digital switchover that’s cleared up a little now (though the one DVB-T2 multiplex is only being used for a few HD simulcasts and a single sub-full-SD +1 channel) and it’s nowhere near as bad as some satellite transmissions apparently get.

Basically it’s reached saturation point with the number of channels and they already have to pad out the listings with loads of shopping and adult stations to make good on their claim of having more than 100… probably half of the total I’d be surprised if they have more than a few dozen viewers at any given point through the day and that bandwidth could be happily freed up, but at this point all it would really mean is a few more stations going from 528 pixel width to 720…

Somehow the idea that the same issue might affect DAB and there could simply be massive over proliferation, or possibly there’s just no need to repeat every single local BBC station everywhere in the country doesn’t seem to have struck them yet, so they keep on cramming ’em in…

Also, if I’ve done the maths on the available bandwidth correctly, there’s like 150 to 200% as much available for DAB as for analogue FM, and the nature of the digital transmission is that something like a 200% usage efficiency is claimed as the signal has a tighter rolloff and the multiplexing allows much more carefully planned frequency re-use. So even without the intrinsically better “modulation” efficiency of lossy-compressed digital (eg, how you can stream ~90db dynamic range audio in mono at the (pre-encode bandpassed) equivalent of 16khz (mp1) or 24khz (mp2), plus stereo at 24khz (mp3) or 48khz (mp4/aac) down an ordinary phone line otherwise only good for roughly 8khz mono with about 45db of dynamic range with an analogue or linear PCM signal…), there’s 4x as much space there. If we allow for simulcasts of all existing FM stations in an area, that still leaves space for an additional 3x as many. And in my local transmission zone I can count off about… hang on… *takes off socks*… somewhere between 12 and 15 stations depending on exactly where you are and how good your aerial is. Which means an additional 36 to 45 stations – all of which should be good for, if we assume their analogue cousins are 15khz stereo and 60 to 70-something db (so about 5-6x the bandwidth), 48khz stereo with hardly any detectable distortion, in mp2.

Quite how you really need more than 48~60 radio stations available in one local area (more, if you include any that aren’t duplicated from FM to DAB, as well as LW, MW, SW…), and why any of them dip below CD quality, really baffles me. If there’s more than that many squeezed into the airspace around here, it seems really excessive. If there’s less than that, and they still sound rubbish in mp2, let alone mp4, where the heck is the bandwidth going?

Even with the much more conservative estimate of about 1500khz / 1500kbit (can’t remember which, I would expect the former should translate to more like 12000kbit with typical 256-symbol quadrature encoding…), which is all of 1.5mhz, ie the space between 88.0 and 89.5mhz or similar on a normal dial (generally you don’t see analogue stations any closer than 0.6 to 0.8mhz, so call it 2 to 3 stations’ worth … back-converting, though, it might be a mere 100khz, or a single step on a typical PLL tuner, so nothing more than the bandwidth of a single mono analogue station), that’s good for about 12 stations at 128kbit each (well OK, that’d be 1536, and doesn’t allow for error correction, so call it 10 x 128 and 2 x 64 for 1408kbit raw) on a single fairly narrow multiplex. And I’m sure when I last broke out the cheap and nasty Lidl DAB radio it showed at least a few different centre frequencies depending on what station it was gamely trying to decipher. So there should be room for the previously considered 3 or 4 dozen if not more.

And every talk/speech, low-rent “community” mono music, or upgraded-from-AM station that can get away with less than 128k means an upgrade for some other station towards 192k or more. 128k – 96k (good quality mono) = 32k… 128+32 = 160k (ehhhh quality stereo). 128-64 (ehh quality mono) = 64k, 128+64 = 192k (ok quality stereo)… (80k = ok mono…). It should work fine, so why the heck doesn’t it?
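The back-of-envelope sums in the last two paragraphs can be checked with a few lines of Python. This is only a sketch of the comment's own arithmetic: the 1500 kbit capacity figure is the commenter's recollection, error-protection overhead is ignored beyond what the comment allows for, and the helper names are invented for the example.

```python
def total_kbps(allocation):
    """Sum a {bitrate_kbps: station_count} allocation."""
    return sum(rate * count for rate, count in allocation.items())

def upgraded_kbps(base_kbps, donor_new_kbps):
    """Bitrate one station reaches if another drops from base to donor_new."""
    return base_kbps + (base_kbps - donor_new_kbps)

print(total_kbps({128: 12}))         # twelve stations at 128k -> 1536
print(total_kbps({128: 10, 64: 2}))  # ten at 128k plus two at 64k -> 1408
print(upgraded_kbps(128, 96))        # donor goes to 96k mono -> 160
print(upgraded_kbps(128, 64))        # donor goes to 64k mono -> 192
```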

Heck, I reckon the dBpoweramp encoder used here is derived from the same pretty dire model (Xing? Blade?) also used in far too many converters, a primary offender being the TMPGEnc video encoder from back in the day. The actual Fraunhofer reference encoder, as used in Audition’s grandfather (CoolEdit), gives hugely better results (128k is tolerable if low-passed to maybe 14khz, 160k entirely acceptable at 16khz, 192k near transparent and would fool most people into thinking it’s mp3 if capped at 18khz), at the cost of speed. Not something that endeared it to anyone trying to do realtime encoding on a computer in the 90s, but dedicated hardware (DSPs etc) could have managed it, and a modern computer breezes it. No other program seems to have the same code available, but TooLame gets passably close. Certainly a lot closer than the really bad ones.

So the answer might be that they’re using a worthless encoder, which is pretty depressing given that there’s lots of different options still available… But even so, it doesn’t seem like they’re using all the available bandwidth.

And with AAC(+) it’s even more offensive… Honestly, if you can get 15 x 200khz stations into the ~20mhz of waveband available in band II, and those all spread out because of chaotic allocation back in the day (instead of doing it the TV way and bunching them together as much as possible), why is it so difficult to do it right with digital and at least 30mhz in band III?

Some great points Christian – thanks for taking the time to share them with us. To avoid clipping I generally normalise to 92% – maybe I need to reduce it still further!

Let’s hope that “Small Scale DAB” in the UK leads to sufficient DAB capacity to implement the kind of bit rates that you recommend! Our ears will benefit!

Why do you think Small Scale DAB isn’t used by BBC and the B.I.G commercial broadcasters?

Answer: To get a rugged DAB multiplex you need a really heavy RF-signal. And when it is a heavy RF-signal, with 500-20,000 watts, it is no longer Small Scale DAB. BBC and the B.I.G commercial broadcasters know that.

With lower RF power you don’t get any rugged signal. It’s a joke…

Truth. I’ve got line of sight on a TV transmitter that can’t be more than five miles away, and it’s one of the strongest in the country … still need a signal booster to get completely reliable DVBT. That ol’ digital cliff is a nightmare. You’d barely even notice that much when it occasionally got a little snow on analogue, but that fuzz is death to a multiplexed digital signal, and the lower the signal strength in the cable the more trouble the receiver has error correcting it, even though the booster doesn’t actually regenerate any of the missing bits…

And that’s with a fairly beefy, correct-band digital aerial lined up in the right direction etc.

Now, crank it back to the kind of ERPs radio stations have vs TV, and consider the relatively comic size of a typical radio aerial… decent local transmission really doesn’t seem like a goer. Pretty certain one of the big “draws” for an eventual digital switchover for the big broadcasters is being able to centralise all the power in their hands and cause the final decline of pirate and ultra-local stations. Not that it really matters in the more distributed digital age anyway, but internet connections can be shut down… much harder to stop something that just floats through the air and doesn’t need any routing. Unless of course people don’t have the receivers for it any more, and there’s powerful digital fuzz coming through on most frequencies they’d use anyway.

Bit rubbish vs the kind of DX you can get on an AM signal in the middle of a lonely night, eh…

Opus is a free and superior alternative to AAC. In ABX testing using the default encoder settings, I could not distinguish it from lossless FLAC: http://www.opus-codec.org/

Thanks Cees. But I don’t think Opus is implemented in the DAB+ standard is it? Maybe one for DAB++!

Opus is a very good audio codec.

When I suggested around two years ago that Opus should be added to the international digital radio standards like DRM and DRM+, the guys behind today’s AAC criticized it. They said that the patents around Opus are disputed.

Surely they would say that. The royalty on DAB+/DRM+-codec is generating a heavy income.

“France Telecom claims patent on Opus, gets rebuffed, with technical analysis of claims”: http://www.hydrogenaud.io/forums/index.php?showtopic=99310

AAC is just a different name for MPEG-4 audio, and essentially, in the broadcasting world, the Moving Picture Experts Group, their friends at the Fraunhofer research group, and the standards they publish are God, Jesus, and the Holy Spirit.

Got something better you want to put forward for public airwave use? Too bad. “Not Invented Here”. Your better bet is to join the group, if you can, and slowly worm your ideas into MPEG-5…

It sucks, maybe, but that’s the way it is.

(Personally I kinda wish Sony hadn’t been such proprietary-IP-protectionist arses and opened ATRAC and particularly ATRAC-3 / ATRAC-S up more for general use. By the time they cottoned on it was far too late for it to gain much popularity… the older versions are only really as efficient as a half-decent MP2 encoder, but the later, much more refined ones – for which, of course, good quality realtime encoder hardware could be bought fairly affordably in the form of a minidisc deck – wiped the floor with MP3 and could probably have held their own against AAC before losing on a technicality… but they would at least have had the benefit of primacy…)

Once FM goes, that’s the end of broadcast quality. DAB at 192 sounds just about comparable to FM in my opinion, on most material, but it still trips up often enough on classical piano and complex textures to make an old Luddite like me reminisce about perfect all-analogue FM stereo in London before there was any encoding anywhere in the chain.

Now, on many a day, Radio Three DAB is down to 160 kbps (horrible) because of extra channels such as sport squeezed in, and Classic FM permanently to an unlistenable 128. One can only guess at what mastering engineers or musicians think of such travesty.

I agree with you to an extent Howard – but at the end of the day it’s a commercial world, so there needs to be a balance between cost and quality. Velvet ears like us probably want 256kbps or above, but Joe Public can’t tell the difference, so the compromise is lower than we’d like. I’d be interested to know when you heard an all-analogue FM signal in London – when I was working there way back in the late 1980s the BBC TXs were fed by PCM circuits running NICAM. Maybe earlier than that was analogue? Come to think of it, I think the IBA feeds were still analogue leased-line then though… and many just had peak limiters with no Optimod/Inovonics.

Hi Radiohead. Five months on and I’ve just caught up with this thread, sorry.

My ‘perfect FM’ dates back to 1960. First in mono and then (as confirmed by a BBC tech op) stereo through very finely balanced analogue lines between Broadcasting House and the Wrotham transmitter. When that link was switched to 13-bit PCM around 1972-3, quality plummeted! I recall my dad saying music sounded ‘woven’ – rather a good image for audio pixellation! Of course the rest of the UK got something rather better through the new system than they had known up to then, and gradually the FM sound improved generally as, I suppose, better encoding was wheeled in.

I returned to this topic driven away from my DAB radio by, yet again, dreadful ear-grinding piano rendition at 160. When Ofcom muscle in on the BBC will they uphold better audio? Of course not. Just take a listen to Classic FM. No, don’t.

13 bit PCM represents a dynamic range of about 78dB which is probably fine for FM. The sample rate would have needed to be at least 32kHz to do justice to FM. By the time I was using leased analog lines the telco would digitize the audio between exchanges (in our case 32kHz, 16bit, which resulted in a bit rate of 512kbps or 8 x 64k circuits). A completely analog copper path over 20 or 30 km is not going to be without its issues either.
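For anyone who wants to check those figures, here is a small Python sketch using the usual rule of thumb that each bit of linear PCM adds about 6 dB of dynamic range (the ratio of full scale to one quantisation step). The function name is just for illustration.

```python
import math

def pcm_dynamic_range_db(bits):
    """Ratio between full scale and one quantisation step, in dB."""
    return 20 * math.log10(2 ** bits)

for bits in (10, 13, 14, 16):
    print(f"{bits}-bit: {pcm_dynamic_range_db(bits):.1f} dB")

# The leased-line example: 32 kHz sampling at 16 bits, one channel.
line_kbps = 32_000 * 16 / 1000
print(line_kbps, line_kbps / 64)  # 512.0 kbit/s, i.e. 8.0 circuits of 64k
```

With this formula 13 bits comes out at a little over 78 dB, matching the figure quoted above.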

Many engineers and programmers have tarnished the reputation of the Optimod, but the truth is these boxes today can work miracles to handle the endless problems associated with adding such an aggressive pre-emphasis curve to the audio you want to transmit. A simple peak limiter on a pre-emphasised audio signal will kill a huge amount of high frequency energy on de-emphasis. The genius of Bob Orban was to work out how to handle the overshoots introduced by pre-emphasis whilst maintaining brightness. It is not necessary to drive them into the kind of excessive limiting and clipping that many stations think is a good idea. The classical music presets in the latest generation of Optimods are more transparent than any other transmitter protection solution ever devised.

Interesting, I went off just to see if I could find the sampling rate and came back with more than I could have expected…

haitch tee tee pea etc (the board software thinks I’m spamming) bbceng.info/Technical%20Reviews/pcm-nicam/digits-fm dot aitch tee em ell

So, to pot that history, it’s a bit of a complex one. Essentially it starts out with the GPO running higher-than-telephone-quality leased lines much like Bell and AT&T did in the States, by joining two, four, eight of their analogue FDM channels together (or putting a higher frequency signal over the space they’d otherwise take, idk) down a typical multiplex line, providing about 7khz in mono for AM transmissions, and 15khz mono or stereo for FM (vs about 3.5khz mono for a single phone line) and basically whatever noise floor could be obtained within practical limits, which was about fifty-something dB. On top of which you have all the usual equalisation, interference, and other signal degradation problems of a long-distance analogue loop, even one that does have the signals on it modulated. Plus the crosstalk, different transmission efficiency and characteristics for each channel (or even L vs R / M vs S for stereo, high vs low frequency…), etc. Nightmare.

Into the frame comes the first PCM system, which oversamples slightly at 32khz (=16khz nyquist) and 13-bit depth, in mono or stereo (= equivalent of 416 or 832kbit/sec), and still multiplexes, up to 14 mono channels (well, 13 audio + 1 control – no idea what the obsession with 13s is all about) for a little under 6mbit/sec plus some basic error correction that apparently pushed it into the mid-6’s. Pretty decent broadband digital transmission for the mid-late 1960s…
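The bit-rate figures in that paragraph follow directly from sample rate times bit depth. A quick Python check is below; it assumes the control channel occupies the same capacity as one audio channel, which is a simplification made for the example rather than something the linked history states.

```python
SAMPLE_RATE_HZ = 32_000
BITS_PER_SAMPLE = 13
CHANNELS_IN_MUX = 14  # 13 audio + 1 control (assumed equal in size here)

nyquist_khz = SAMPLE_RATE_HZ / 2 / 1000               # highest representable audio frequency
mono_kbps = SAMPLE_RATE_HZ * BITS_PER_SAMPLE / 1000   # one channel
stereo_kbps = 2 * mono_kbps                           # left + right
mux_kbps = CHANNELS_IN_MUX * mono_kbps                # whole multiplex, before error correction

print(nyquist_khz, mono_kbps, stereo_kbps, mux_kbps)  # 16.0 416.0 832.0 5824.0
```

5824 kbit/s is the "little under 6mbit/sec" mentioned above, before any error correction is added.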

(The signal was still rolled off at 15khz to avoid aliasing problems)

This shouldn’t have done anything other than improve the apparent quality, as the dynamic range at the receiving end was somewhere in the 60s of dB, and the linearity of response across the whole frequency range would have been much improved. No “bittiness” should have been evident, any more than with modern digital equipment – take a 16 bit recording, attenuate it to 12.5% linear (ie, lose the 3 LSBs), save it out (to make sure no clever-clever undo buffer stuff spoils the result), load it back in and amplify to 800%… listen back… does it sound “woven”?
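The experiment suggested above can be mimicked on raw integer samples. The sketch below is a simplification: it truncates with a bit shift, whereas a real audio editor may round or dither when attenuating, so treat it as an approximation of "attenuate to 12.5%, save, amplify to 800%". The sample values are arbitrary.

```python
def drop_lsbs(sample, bits=3):
    """Divide by 2**bits with truncation (floor), then multiply back up."""
    return (sample >> bits) << bits

original = [12345, -12345, 7, -7, 32767, -32768]   # arbitrary 16-bit values
roundtrip = [drop_lsbs(s) for s in original]
errors = [o - r for o, r in zip(original, roundtrip)]

print(roundtrip)  # every value is now a multiple of 8
print(errors)     # the error per sample stays between 0 and 7
```

What is left is effectively a 13-bit recording stored in a 16-bit container, which is the comparison the comment is inviting.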

Later, a further development comes from wanting to use long distance TV transmission lines to also carry FM hidden within, in the “dead” parts of the horizontal blanking interval. By massaging the sample rate down to 31.25khz (= nyquist equal to TV scan rate, 15.625khz) and just using a steeper filter, and the fastest available bitstream encoders, they could fit 10-bit stereo in between everything else (including the TV signal’s own sound).

Now, with that approximately equalling 60db, some might have thought that was still pretty good for the task in hand, but they took it a bit further, throwing in the concept of signal companding (a form of temporary dynamic compression often used, in the analogue realm, to bring the volume of the quietest signals of a wide-dynamic signal (eg a radio mic) up above the floor of a noisy link (eg the bodypack transmitter) so they’re not lost or affected by interference, stretching them back out again in the receiver for further distribution on better quality links) but adapting it to the digital realm. Adaptive companding in analogue systems might be used to give a sort of sliding window into the wider dynamic range of the original signal by stretching the peaks of a quiet passage up to fill the full capacity of the intermediate link, and allowing the quieter bits of a loud passage to be lost, as they’re then harder for the ear to detect anyway.

And this digital system that was to become known as NICAM did much the same, though in discrete blocks (of something like 64 samples IIRC, so just over 2ms?) – taking a 13-bit, later 14-bit input signal (so, 78 or 84db approx), reading a block’s worth of sound into a buffer and determining the most significant bit actually used, windowing all the samples of that block between that bit level and MSB minus 9 (for a 10 bit output range) and adding in a minimal amount of metadata (like, maybe 10 bits of an additional sample, though ISTR it was less, and actually achieved in much the same way as with digital phone lines – by “stealing” certain LSBs from the transmitted stream, so it was overall actually “nine point something” bits (and PCM phone lines are 7-point-something, hence it’s not actually possible to shove 64kbits down them with a modem, and in fact user-spec modems which only have an analogue rather than digital ISDN connection can’t get more than 33.6k), with, say, 8 bits of data scattered across the 64 samples… 2 or 3 for the dynamic range (4 steps to say MSB=12,11,10,9 … extending to 8 needed a whole extra bit), a couple for channel mode (stereo, mono with only one pair of samples per line instead of two, twin language mono, mono + studio link), and maybe some others (stereo mode? eg L+R vs M+S… pre emphasis? 13 vs 14 bit original sample?)).

At the decoder end, you read in 64 10-bit samples, strip the metadata into its matching registers (and either pad the LSBs with whatever the next sample’s state is, the opposite, or just leave it as 0…), dump the binary data into an output register with the appropriate amount of barrel shifting (0 to 3, or 0 to 4 bit spaces…) and have its channels either sent to the stereo transmitter or split up into mono pieces as specified…

Nice and simple, but it does mean the companding level, and so the ultimate noise floor, varies at a rate of about 500hz… If the original signal wasn’t as clean as it could be, with maybe the noise floor somewhere around the 11 to 12 bit level and useful signal managing to get down a little way into the fuzz, and the over-the-air transmission was good enough to reveal the fine minutiae of what came up the line, it’s entirely possible that the not quite entirely smooth varying of how much detail was in the noise (in louder passages, none at all other than the 10-bit quantisation error; in quieter passages, a lot softer and more natural, but also more obvious because the output LSBs were locked at 0 during loud parts then become chaotic as the actual signal drops somewhat…) could come across as an odd, not 100% perceivable, hard to define synthetic edge to the sound. “Woven”, as if it’s made of innumerable tiny, not entirely homogeneous threads crossing over each other, could be a really good way of expressing it.
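To make the block companding idea concrete, here is a heavily simplified Python sketch of the encode and decode steps as described in this comment. It is not the NICAM specification: the scale-factor signalling, LSB stealing, parity and channel-mode bits are all left out, and the function names are invented. The default block size of 64 follows the recollection above (published descriptions of NICAM-728 usually quote 32-sample, 1 ms blocks), so treat it as a placeholder.

```python
def encode_block(block, in_bits=14, out_bits=10):
    """Return (shift, mantissas): drop just enough LSBs for the peak to fit."""
    max_shift = in_bits - out_bits
    limit = 1 << (out_bits - 1)  # 512 for a signed 10-bit output word
    shift = 0
    while shift < max_shift and any(
        not -limit <= (s >> shift) < limit for s in block
    ):
        shift += 1
    return shift, [s >> shift for s in block]

def decode_block(shift, mantissas):
    """Barrel-shift the mantissas back up; the dropped LSBs come back as 0."""
    return [m << shift for m in mantissas]

def compand(samples, block_size=64):
    """Encode and immediately decode, block by block."""
    out = []
    for i in range(0, len(samples), block_size):
        shift, mantissas = encode_block(samples[i:i + block_size])
        out.extend(decode_block(shift, mantissas))
    return out

quiet = [100, -200, 300]   # fits in 10 bits already, so it survives untouched
loud = [8000, -8192, 5]    # the peak forces a 4-bit shift, so the 5 is lost
print(encode_block(quiet)[0], compand(quiet))
print(encode_block(loud)[0], compand(loud))
```

The second example shows the effect discussed above: the amount of low-level detail that survives depends on the loudest sample in each block, so the effective noise floor moves from block to block.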

After all, it’s a really early type of perceptually-masked lossy compression at the end of the day. It only achieves a crunch of about 1.3 to 1.4x, rather than the 4x of even MP1, but everyone’s got to start somewhere. And as with all lossy compression schemes, you get just-audible artefacts when they’re faced with input-signal corner cases and the encoding rate is at the “critical” level…

(and, yes – NICAM was being used, even in the 70s, for radio, long before it was ever trialled for use in the TV signals that it piggybacked on… it was an entirely internal BBC thing at first, designed to keep most of the improved transmission quality of PCM radio but making it a little more efficient, and combinable with the TV signals they were now trunking anyway, so that they didn’t need entirely separate leased lines… though in some cases they did that anyway, in order to distribute PCM and NICAM signals together to sites in the middle of upgrading from one to the other; 12 PCM and 6 NICAM mono channels (or 6 + 3 stereo) fit almost perfectly into the commercial 8.4mbit data links they leased later in the day, a nice bump from just 13 PCMs, and more than the old scheme would have managed, without losing compatibility with existing kit by going completely NICAM)

All of which, however, confuses me some. I could swear blind that I have FM recordings, on tape or taken live from air into the computer, from the pre-DAB age where the frequency range clearly extends beyond 15khz. Like, much more up towards the 19khz of the stereo pilot tone itself (which, yes, a good tape can just about capture, and even cheap ones can generally show something out towards 17; if it can’t manage even 15 then you know you’ve been played). Certainly to the 16khz ceiling of the typical realtime mp3 encoders I used at the time. But this would suggest that it was never so…

I wonder, am I imagining it? Is it artefacts from dodgy equipment (eg an old micro-system hifi and a computer almost literally cobbled together from scraps, though the soundcard was at least half-decent) and maybe even dodgier software? Or could it actually be that for the shortest of times the Beeb experimented with higher headroom levels on their FM broadcasts, eg by running just a slightly faster NICAM/PCM link? With similar filter shapes, 18khz only needs 20% more bandwidth than 15khz (e.g. 37.5khz sampling for NICAM, 38.4khz for regular PCM), and goes most of the way to filling in the gap between “ok for radio” and “about as close to CD as it’s practical to get”… Might even have increased the bit depth too. 1970s to late 90s/early 2000s is a long time after all.

Nowadays of course you’re lucky if it’s not just a rebroadcast of the bloody DAB stream. As in the *decoded* one…

Sadly, it’s all getting worse and worse. The great majority of stations are now broadcasting MONO at 64K. Which sounds awful in anyone’s book. i suppose the nostalgic among us can be reminded of listening to Radio Caroline. As many have mentioned, radio broadcasting is just too expensive – and that is effectively driving it down to a rock-bottom service. Sadly, i don’t really see a solution to this. DAB+ will get squeezed just the same as current DAB has over the years. Maybe audio-only broadcasting is just dying out.

I’m tempted to go and dust off that FM stereo transmitter I have in the garage…

I note your use of dbPoweramp to create the MP2 files simulating DAB. It should be considered that the dbPoweramp Layer 2 encoder does not support Joint Stereo. Although I don’t have a great deal of experience with listening to DAB, as we use DAB+ here, I would assume that at 128kbps stereo, most broadcasters would use Joint Stereo encoding, which would improve the perceived quality on a lot of content. Twolame probably represents the best performance the Layer 2 codec has managed to achieve.

I believe both Joint Stereo and full stereo are used here in the UK – I don’t know why!

Because of hopeless engineers who have gone off and got a basic level diploma at college evening class, haven’t actually got that much interest in the area besides thinking it’d be cool to do something with radio like their favourite DJ, and haven’t any of the background knowledge or experience that comes with playing with this stuff hobbyist style, or professionally for many years?

So they just pick up whatever the cheapest encoder board, or possibly the first freeware PC-based system that they find, and use that, not knowing or bothering with anything beyond which button to push to make it go, selecting the bit rate, and keeping the levels between the green and red bits…

There’s maybe an argument in that a lot of MP2 encoders use *intensity* stereo when set to “joint” mode, which can sound a bit rough (I’m not sure I’ve ever heard a difference myself, though the “side” channel can look pretty badly destroyed if you convert into M+S instead of L+R and then look at the spectrogram), even though others are entirely capable of using regular JS as well. So if the stereo image seems really messed up with their naff encoder set to JS (actually IS), they’ll move it up a notch to Separate Stereo instead… which still allows reallocation of bits between the channels to prioritise the one which has more complex material, but that’s about it. (The final choice is twin mono, which is simply just two regular mono channels packaged together, e.g. for multiple language streams where you don’t want there to be much bitrate interference, especially if they may later get split apart from each other and need to keep exactly half the combination bitrate)
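For anyone wanting to look at the “side” channel as described above, the L+R to M+S conversion is just a sum and a difference. This is a rough sketch for inspecting decoded audio, not a description of what any particular encoder does internally; the function names and sample values are made up for illustration.

```python
def lr_to_ms(left, right):
    """Convert left/right sample lists to mid/side.
    Mid is the average of the two channels, side is half their difference."""
    mid = [(l + r) / 2 for l, r in zip(left, right)]
    side = [(l - r) / 2 for l, r in zip(left, right)]
    return mid, side

def ms_to_lr(mid, side):
    """Invert lr_to_ms: left = mid + side, right = mid - side."""
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right

# Hypothetical samples: identical, opposite, and left-only content.
left = [0.5, -0.25, 1.0]
right = [0.5, 0.25, 0.0]

mid, side = lr_to_ms(left, right)
print(mid)   # [0.5, 0.0, 0.5]
print(side)  # [0.0, -0.25, 0.5]
```

Material that is identical in both channels leaves the side channel at zero, which is why a damaged stereo image shows up so clearly when the side channel is viewed on its own.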

When really what they should have done is get a better encoder in the first place, because if it’s defaulting to (or not allowing anything other) than intensity mode, chances are it’s making a right dog’s breakfast of the rest of the signal as well.

And of course some of them might not allow anything other than separate stereo in the first place… dear oh dear. So if you need to save some bits and not have it sound awful, the only option is dropping to mono. When, out in the non-broadcast world, good quality Joint Stereo is one of lossy audio compression’s most powerful weapons for delivering near-transparent results with practical bitrates. So long as you use a good encoder of course.

Or in short, it’s a matter of greed, ignorance, incompetence, and a lack of talent in the field, allowing dumb mistake to pile on top of lazy decision…

But anyway, I’m not intimately familiar with dBpoweramp, but dropping right down to 5khz frequency response at 80kbit stereo looks like the behaviour of a bad encoder to me. It should gracefully lose analogue bandwidth as the bitrate drops, in order to avoid really horrendous digital artefacting (as in the 12kbit AAC), but nowhere near that much – last time I saw such a weird combination of low bandwidth but stereo as well, it was a 32kbit MP3, and really you might hope to get slightly better from a decent encoder (like, 6khz… certainly 10khz is possible around 56kbit and 12khz stereo at 80kbit mp3 with reasonable artefact levels, with a sliding scale of improvement in-between). Even if mp2 is only half the efficiency of mp3 (and I’d say really it’s more like 2/3rds), the output should be more wideband than that.

One thing that strikes me as missing is the spectrograph image for 128k mp3, and anything at all for 192k. Those would be the clinchers, as I can pretty much *eyeball* a good vs bad encoder at those rates, in mp2 and mp3, just from the spectrums. Though I have to say the 80k mono result (which should be roughly equivalent to 128k with passable joint stereo) doesn’t give me much confidence given how harshly it rolls off the treble itself. As noted above, a fairly consistent 14khz pre-encoding (not in-the-encoder) lowpass filter seemed to give acceptable output with a good encoder at 128k (I did a lot of those for VideoCDs and such…), and that was with it appearing more like the FM signal, just a kilohertz lower…

Anyway, I was going to go onto the AAC, but I have to get up from the PC now

As a 70+ year old, I recall experiencing early sixties BBC Third programme stereo and was blown away by the high quality audio, which largely continued through the sixties. I also greatly admired Dutch radio sound quality. German broadcasting was good too, but for the fact a lot of compression was used, resulting in that heavy bass sound so beloved in that nation. Intelligibility however was very good, largely down to German ARD boosting amplitude around, I think, the 8khz level.

In those early ’60s days though, the signal transmission from studio to transmitter here in the UK was dire, relying far too much on poor, heavily compressed Post Office controlled land lines. PCM sorted that out immediately it was introduced.

But I can only agree totally with comments on contemporary radio broadcasting standards: from the moment CD-sampling quality became challenged, both in the home ‘hi-fi(!)’, in broadcasting, and especially on-the-move, it has been a flight to failure for high quality audio. Tragic in fact, especially when one realises the efforts sound engineers still go to to balance and record concerts and discussions.

Here in Australia we are suffering the same fate. The ‘efficiency’ of AAC+ used on DAB+ was used to downgrade the average quality to 64KBit/s – at best – and it sounds tinny or metallic for music stations. It’s a terrible tragedy. I see digital radio going the same way as broadcast TV for the same reason – better quality – and diversity – online.

Shhhhhh, don’t tell people that AAC+ on digital radio sounds bad. The broadcasters are trying to convince everyone it sounds fantastic!

Better than CD I have even heard some of them say!

I have never heard any DAB+ emission better than FM… Classical music just sounds “dead” on DAB+ (vs Akai AT 93L on FM).

At 73 now, I am disappointed with developments like DAB radio. I come from the recording industry where we used 16bit 48kHz PCM formats from 1983 and later evolved to 192kHz/24bit recordings. At the time radio already was going to lower audio standards, for instance in the late 90’s the archive was stored on a compressed format in the Dalet system at Dutch radio. Reasons: cost and storage space. Quality was less important. That same trend was followed-up by DAB+ sound quality for transmission. I know from my personal experience that in order to obtain good stereo quality you needed between 256 and 320kb/s AAC streams. Now I saw that 64kb/s is used for DAB and that includes the meta data!
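For scale, the raw data rates of the PCM formats mentioned above can be worked out directly and set against a 64kb/s stream. This is only a back-of-envelope sketch: it assumes two channels and ignores metadata and framing overhead, and the function name is hypothetical.

```python
def pcm_bitrate_kbps(sample_rate_hz, bits_per_sample, channels=2):
    """Raw PCM data rate in kb/s (1 kb = 1000 bits), stereo by default."""
    return sample_rate_hz * bits_per_sample * channels / 1000

studio_1983 = pcm_bitrate_kbps(48_000, 16)   # 1536.0 kb/s
hi_res = pcm_bitrate_kbps(192_000, 24)       # 9216.0 kb/s

# How much smaller a 64 kb/s broadcast stream is than 16bit 48kHz stereo PCM.
reduction_vs_64k = studio_1983 / 64          # 24.0

print(studio_1983, hi_res, reduction_vs_64k)
```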

My conclusion is that radio transmission quality is no longer important for high quality audio, since the broadcasters go for quantity and services for mobile use.

For real high audio quality the consumer must use recorded audio on Blu-ray discs, SACD, QMS etc. Most people don’t seem to care anymore, since it is all about the money. The pleasure and joy of listening to a high quality audio recording, made by people who really love music, is only for a small group of people who value it. Radio broadcast slowly faded itself out of this realm.

Gert Vogelaar

I believe most broadcasters go for “good enough” quality. Where “good enough” means an average pair of ears, listening on average audio equipment and in average noisy environments. I think they strike a pragmatic balance most of the time, but not when they also cut corners on other parts of the transmission chain, and the end result of chaining more than one codec sounds awful! The strangest thing I find is the commercials going mono – sounds really flat to me, but according to industry “thought leaders” no-one notices the difference between mono and stereo!

Most broadcast AM where I live in Australia is 24kHz wide (12kHz audio). Better receivers go up to 20kHz, though they are hard to find.

80k MP2 mono sounds like nearby AM stations, except with “pre-echo”. 80k MP2 stereo sounded like a cheapy portable radio.

56k AAC had a loss of stereo image, but otherwise was ok. There was more hiss on the vocal components and voice over, which reminded me of FM Stereo.

32k AAC reminded me of an over-driven small speaker. The other low bit rate AAC’s were muffled, and had a “waterfall noise”.

AAC SBR works by using selective “aliasing” to reconstruct higher frequencies, which works for speech, but is not designed for music.

I get reasonable sound quality on the FM Band on my Yamaha RX-V390RDS, which I bought in the mid 90’s. I used to listen to BBC Radio a lot until the departure of Ken Bruce.

A while ago I bought a Tibo TI420, from a well-known auction site (ebay), I only listened to Radio 6 Music when an artist I like was being interviewed, but the problem with that was the signal strength has to be really good otherwise it would make weird noises, the supplied aerial is simply not good enough.

At one time there were adverts several times a day on Radio 2 telling people to upgrade to DAB because “it’s better”.

So now I find it’s not better at all; in fact because so many stations have been crammed into the bandwidth (as pointed out by Tahrey), quality is severely reduced. You can certainly notice the poor quality of stations that run at 32kbps, or indeed anything less than 128kbps – it’s difficult for me to listen to such atrocious quality. At least R6Music runs at 128Kbps, 256kbps should be the standard, but I don’t know if my DAB supports that.

More recently DAB+ was brought out, I thought perhaps now we’ll get better quality but this does not seem to be the case; they’ve used more efficient Compression Techniques so they can cram even more stations in and sell more licenses. The quality needs to be improved and if they want more stations we need more bandwidth, sadly if they did that, receivers like mine would be obsolete.

I notice that a lot of CD’s have been cobbled so that there is no real bass, and some just don’t sound good at all. Funny that we’re now being told that vinyl is quite good or has a “warm” sound, but yet in the early eighties CD’s were being promoted as being a superior format. Of course the CD should not have been developed until 32 bit audio was available instead of being launched in 16 bit. They did try launching the SACD a while back but it didn’t really take off, I think the players and the discs were too expensive.

CD’s are slowly going out of fashion, even some £Shops no longer stock them and those that still do don’t have many.

Yes, as David pointed out: It’s a race to the bottom, I’m sad to say.

Very interesting discussion. I am also bitterly disappointed at the lost opportunity with DAB and DAB+. The notion that we either want or need all these pointless channels has not come from the listening public but from the suppliers.

From an audio engineer’s perspective, a similar tragedy has occurred with the almost complete loss of true stereophonic recording. Everything seems to be multi-miked and mixed down artificially, losing all the attendant phase and natural reverberation information. So we get an artificial rendition of what might have been a wonderful musical performance. Then, if that is not bad enough, it is further mangled by broadcast radio. It saddens me that most youngsters growing up now will never hear good quality audio, or understand what it is. Tragedy is not a strong enough word for it.